The Future of OT Security

Focusing primarily on the process manufacturing industry, this article unpacks the evolution and future of OT Security.

Synopsis

Around the mid 1990s, the process manufacturing industry experienced a conceptual earthquake of sorts. Following eons of managing their supply chains as what could best be called “supply-driven supply chains,” new and commercially viable computing and communication technologies allowed process manufacturing enterprises to connect the decision-support tools (and hence processes) along the supply chain, in both directions. The result was the birth of a radically different way to manage supply chains: as “demand-driven supply chains,” which fuelled a wave of investment in digital transformation initiatives attempting to monetise the new operating model.

But even as those massive investments were starting to yield significant gains, a dark new risk was lurking on the horizon, potentially threatening this landmark transformation… Seeded by CNN’s release of footage of the DHS’s Project Aurora in September 2007, and the uncovering of Stuxnet 3 years later, the risk of cyberattacks on industrial control environments was brought into sharp focus. In parallel, the discipline of OT Security was born in earnest, with the mission to address this new risk.

The discipline’s early constituents, both consultants and tech entrepreneurs, quickly adopted the simplistic Purdue Model as the tool to underpin their methodologies & design philosophies. Augmented with four new and highly restrictive “Commandments” – that we will discuss in this paper – the “Purdue Bible” was turned into the underlying framework for OT Security. The result was partly disconnecting the manufacturing supply chain along the Purdue Model’s demarcation lines, directly jeopardising the connectivity required to facilitate those digital transformation initiatives.

Yet, slow moving and highly risk averse as it is, the process industry – which already invested heavily in people, processes and technology to digitally transform its manufacturing supply chains – will surely not accept being deprived of reaping the benefits of its investment for too long. For several years the Purdue believers had the upper hand, convincing the leaders of the industrial enterprise to jump through hoops and partly put their digital transformation dreams on hold, in the name of cyber-securing their manufacturing assets. But under the surface, alternatives were brewing. Those alternatives are now coming of age. In particular that of the Open Process Automation™ Forum (OPAF), initiated by ExxonMobil nearly 6 years ago, has all the hallmarks of becoming the future standard in the process industry. Another alternative, offered by NAMUR – an international association of users of automation technology and digitisation in the process industry – is gaining traction as well. Such initiatives promise to redefine OT Security too, and usher in a wave of innovation, in which ICS/OT architectures will be designed and developed from the ground up with security in mind (aka “secure by design”), hence fitting today’s highly dynamic digital arena.

In this paper we will analyse the sources of our “original sin,” the drivers for change, and the fundamental developments, like OPAF and NAMUR, that are about to change the discipline of OT Security forever. In so doing, we will also analyse the winners & losers that will undoubtedly emerge from such a transformational shift.

Motivational Underpinning – A Brief History of Supply Chain Management

Around the mid-1990s, the process manufacturing industry experienced a conceptual earthquake. For as long as anybody could remember, practically from the Industrial Revolution and until the present day, process manufacturing enterprises managed their supply chains as what could best be called “supply-driven supply chains”: you manufacture as much and as efficiently as you can, store your products in stocks, and use your sales and marketing power to maximise selling the stuff you have in stock. The reason for managing the supply chain this way was mainly that this was practically the only possible way, given the technology available at the time; and with it, admittedly, the fact that customers were rather unsophisticated and were willing to go along with the “supply push”.

It should be noted that the discrete manufacturing segment of the industry started experimenting with Just-In-Time (JIT) manufacturing – a form of demand-driven management of production – as early as the 1970s, when Taiichi Ohno led the implementation of this approach within Toyota’s manufacturing plants in Japan; the approach later spread across multiple Japanese discrete manufacturing companies. But the process industry didn’t follow this transformation, due to the lack of adequate decision-support and communication technologies to support it.

All this started changing when new and commercially viable computing and communication technologies appeared in the early-1990s, that allowed the process manufacturing enterprise to connect its decision-support tools (and hence processes) along its supply chain – in both directions. In parallel, customers became far more sophisticated, and started demanding to get what they wanted – and when they wanted it. It’s hard to say which of those two was the chicken and which the egg; it seems that both processes ignited simultaneously and began amplifying each other in tandem. The result, however, was the birth of a radically different way to manage supply chains: demand-driven supply chain management.

This fundamental shift led to the creation of new business processes, such as Enterprise Resource Planning (ERP), Manufacturing Execution Planning (MEP/MES), Demand Forecasting, Demand Management, Demand Fulfilment, etc. In parallel came the advent of technology companies focused on providing the decision-support tools for those new processes: SAP, Oracle, Salesforce, i2 Technologies, Manugistics, and many others.

Deploying the new decision support technology to the new business processes, manufacturing enterprises (both process and discrete) embarked on a massive transformation process, turning the management of their supply chains on its head.

This process was put on steroids during the dot-com era, when technology companies fueled it with an unparalleled wave of innovation, in both segments of B2C and B2B (and its later extension to B2B2C). As a result, towards the turn of the millennium demand-driven supply chain management was all the rage in the manufacturing industries, with projects budgeted at tens and even hundreds of millions of dollars springing up across the industrial space.

Slowly but surely, the manufacturing sector started seeing returns on the massive investment, in the form of smoothly connected supply chain management frameworks, indeed driven from the demand side and propagating demand signals all the way down to the manufacturing floor (and in some cases sideways too, to 3rd party suppliers). The story of Dell Technologies is perhaps the best example, where the implementation of its Direct-to-Consumer and Zero Inventory strategies (the ultimate demand-driven supply chain) catapulted the company to become market leader in the consumer electronics sector.

While consumer electronics was indeed a first-mover sector, the trend spread across the broader manufacturing industry. In the Oil & Gas sector, for example, Shell pioneered, towards the end of the 1990s, the novel concept of demand-driven supply chain management in its global downstream business; it was later followed by others in the O&G sector. Overall, the process peaked early in the 21st century, albeit with a temporary slowdown during the burst of the dot-com bubble, around the turn of the millennium.

But even as those massive investments were starting to yield significant gains, a dark new risk was lurking on the horizon…

The Original Sin

In late September 2007, CNN released footage of the DHS’s Project Aurora, showing in broad daylight the feasibility of using a cyberattack to cause a 2.25MW diesel generator to self-destruct. Suddenly, the risk of cyberattacks on Industrial Control Systems (ICS) moved onto the agendas of cybersecurity professionals, albeit still at a rather theoretical level. The theory turned into sharp reality 3 years later, with the uncovering of Stuxnet – a 500KB computer worm that likely destroyed over 1,000 centrifuges at the Natanz uranium-enrichment plant in Iran. After that, the cyber risk to ICS and SCADA networks was firmly on the table, and the discipline of OT Security was born in earnest.

Sensing the opportunity, two “armies” started marching on, simultaneously, to staging positions: An army of consultants, gearing up to confront their clients with the new and now imminent risk (which was true) and convincing them that they know what to do to address it (which wasn’t, yet). And an army of tech entrepreneurs, gearing up to face their potential venture capital investors and convincing them of the same new and imminent risk and, indeed, that they have, albeit in theory, the technology needed to mitigate it (they similarly had only vague ideas at that point).

Alas, both armies – and especially their generals – had a problem. Almost all those generals came from the relatively well-understood world of Information (i.e., IT) Security, either leading consulting engagements for banks and the like, or developing software solutions to protect IT networks. As such, they lacked the necessary understanding of production environments and process control systems. So they were in desperate need of a good theoretical, scientific framework – preferably a simple one – with which they could equip their soldiers heading to battle, so that they would look convincing when arguing that they could address the risk professionally.

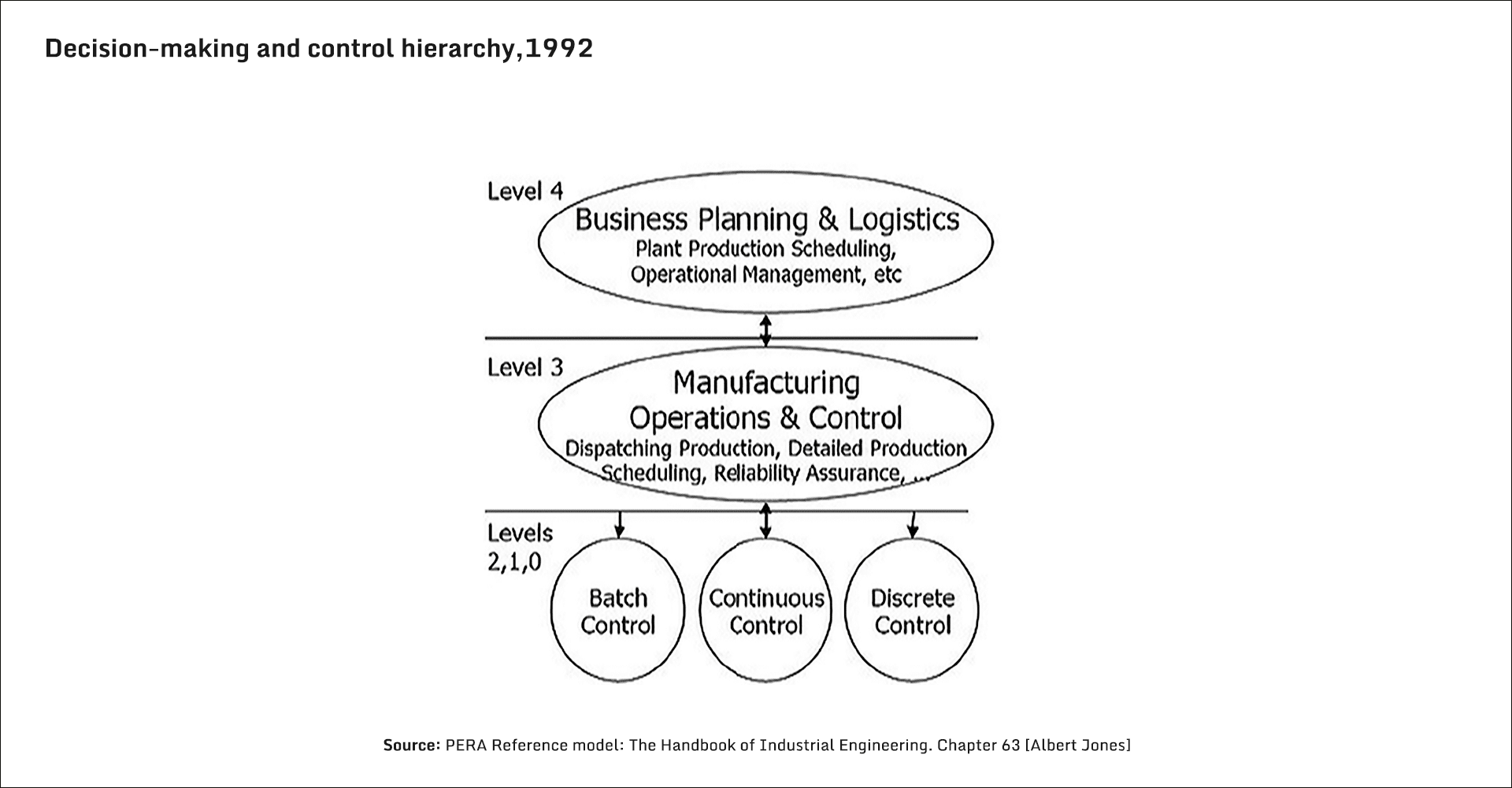

The Purdue Bible

While searching for such a framework, they had an epiphany of sorts and discovered, deep in the nearly forgotten sea of academic obscurity, the perfect tool for their purpose: the Purdue Model (Figure 1). It was truly a godsend, for both armies. It had a big name behind it and a scientific flair to boot, coming from a reputable research university in West Lafayette, Indiana. And it was dead simple; you can’t have it any simpler: The technology landscape of the industrial enterprise could be modelled in 5 simple layers: the physical process layer at Level-0; the basic process control (PLCs, sensors, actuators, etc) at Level-1; real-time supervisory control (HMIs, DCSs, SCADAs) at Level-2; manufacturing operations (MES, Historians, APCs, etc) at Level-3; and the business logistics and enterprise planning & scheduling decision support at Level-4. How beautiful. Clearly any consultant or tech entrepreneur could easily present this to his/her target audience with full confidence, coming across as an authority in the field!
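For readers who think in code, the five layers listed above can be written down as a small lookup table. This is only an illustrative sketch: the `PurdueLevel`, `ASSET_LEVELS` and `level_of` names are made up for this example, and the asset-to-level mapping simply restates the examples given in the paragraph above.

```python
from enum import IntEnum


class PurdueLevel(IntEnum):
    """The five layers of the original Purdue Model, as listed in the text."""
    PHYSICAL_PROCESS = 0
    BASIC_PROCESS_CONTROL = 1
    SUPERVISORY_CONTROL = 2
    MANUFACTURING_OPERATIONS = 3
    BUSINESS_PLANNING = 4


# Hypothetical lookup table; the entries restate the examples from the text.
ASSET_LEVELS = {
    "PLC": PurdueLevel.BASIC_PROCESS_CONTROL,
    "sensor": PurdueLevel.BASIC_PROCESS_CONTROL,
    "actuator": PurdueLevel.BASIC_PROCESS_CONTROL,
    "HMI": PurdueLevel.SUPERVISORY_CONTROL,
    "DCS": PurdueLevel.SUPERVISORY_CONTROL,
    "SCADA": PurdueLevel.SUPERVISORY_CONTROL,
    "MES": PurdueLevel.MANUFACTURING_OPERATIONS,
    "Historian": PurdueLevel.MANUFACTURING_OPERATIONS,
    "APC": PurdueLevel.MANUFACTURING_OPERATIONS,
    "enterprise planning": PurdueLevel.BUSINESS_PLANNING,
}


def level_of(asset: str) -> PurdueLevel:
    """Return the Purdue level for an asset type named in the table."""
    return ASSET_LEVELS[asset]


print(level_of("Historian").name)  # MANUFACTURING_OPERATIONS
```

That the whole model fits in a dozen lines is exactly the simplicity that made it so attractive as a presentation tool.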

And indeed, soon enough the Purdue Model became a standard underpinning, to be found anywhere between slides #3 and #8 of any presentation given on the topic, pretty much everywhere around the world.

True to their mission, the consultants and the tech entrepreneurs didn’t let some disturbing facts confuse them of course! Facts such as that this framework was developed some 20-25 years earlier (in 1992); for a totally different purpose (a high-level reference architecture model for the industrial enterprise); and in a world where there was still no internet in the equation… All those minor facts were happily overlooked, as they couldn’t stand a chance against the advantages this simplistic model had brought to its new cohort of believers.

The First Commandment

Having a “deeper” look at the Purdue Model, the consultants quickly discovered something interesting: All the lower 4 layers of the model – Level-0 to Level-3 – involved actual manufacturing processes and hardware that were physically located on-premise, either at the production site (Levels 0 & 1) or in control rooms (Levels 2 & 3). While the more advanced decision support tools, of Level-4, were typically located back-office, off-site, and in many cases even in different geographic locations altogether.

This was an amazing discovery, which also suggested a relatively simple solve-all solution: Since production sites are fairly well protected, physically, disconnecting (dataflow-wise) Levels 0-3 from Level-4 would make the ICS/OT section “air-gapped”, and hence resolve the problem “by definition”, at least to a large extent (excluding insider-threats).

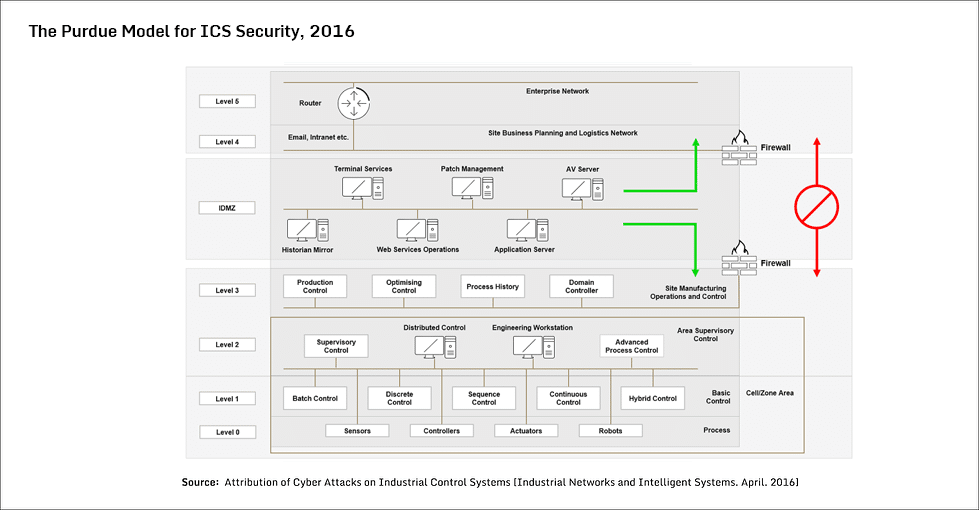

Which led to the consultants announcing their First Commandment in the Purdue Bible: “Thou shalt not transfer data directly between Level-4 and Level-3”.

And to support it, added to the original Purdue Model a demilitarised zone – a concept borrowed from traditional IT networks at the time – between Level 3 and Level 4 (aptly referred to as Level 3.5). This “Industrial Demilitarised Zone” (IDMZ), aka the Perimeter Network, provided a layer of defence to securely share ICS data and network services from the industrial zone (Level 0-3) with the enterprise zone (Level 4). But equally importantly, it made the good-old reference model of Purdue look far more like a scientific cybersecurity diagram (Figure 2).

As a result, “Perimeter Security” became the core component of the solution offered by consultants to their clients in the early 2010s, backed, of course, by the scientific Purdue Model.

It is very important to note here that implementing such Perimeter Security, by separating (theoretically) the IT from the OT layer, risked invoking a false impression of implementing proper Network Segmentation – which is indeed one of the important concepts of a secure ICS/OT network architecture. Note, however, that this separation on the IT-OT boundary (i.e., between Levels 3 and 4) is merely implementing the most basic Network Segmentation; a lot more architectural design is required to achieve appropriate and secure network segmentation.
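The perimeter rule discussed above is simple enough to express in a few lines. The following is a minimal sketch, assuming that levels are represented as plain numbers and that the IDMZ is encoded as 3.5; the `zone` and `flow_allowed` helpers are hypothetical names introduced for illustration, not part of any standard or product.

```python
IDMZ = 3.5  # "Level 3.5", the Industrial Demilitarised Zone


def zone(level: float) -> str:
    """Map a Purdue level to the coarse zone it belongs to."""
    if level == IDMZ:
        return "idmz"
    return "enterprise" if level >= 4 else "industrial"


def flow_allowed(src: float, dst: float) -> bool:
    """Apply only the First Commandment: no direct enterprise/industrial flow.

    Flows inside one zone, or to and from the IDMZ, pass this check.
    """
    return {zone(src), zone(dst)} != {"enterprise", "industrial"}


print(flow_allowed(4, 3))    # False: direct Level-4 to Level-3 transfer
print(flow_allowed(4, IDMZ)) # True: enterprise side reaches the IDMZ
print(flow_allowed(IDMZ, 3)) # True: IDMZ relays to the industrial zone
print(flow_allowed(1, 2))    # True: nothing is checked inside the zone
```

The last line illustrates the caveat raised above: a check on the IT-OT boundary alone says nothing about traffic within Levels 0-3, which is why it amounts to only the most basic form of network segmentation.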

The Second Commandment

Having established the IDMZ as a perimeter security concept, the consultants looked deeper still at their Purdue Model, and discovered that, alas, the original model (mind you, it was developed in 1992) had no representation whatsoever of the Internet, the Web, IoT, etc, and of their related enterprise applications!

But they obviously didn’t let this deter their determination. Very quickly another layer was added to the original model – Level-5 – which included all the internet related enterprise applications (see Figure 2 above).

And with it came the announcement of the Second Commandment: “Thou shalt not use the internet on the manufacturing floor”.

It didn’t matter that at that point in time the Cloud was already the king-in-the-making, and IoT & I-IoT started proliferating at an exponential rate. When people (myself included, I must admit) literally shouted that this is conceptually wrong – the internet, the Cloud and IoT ARE already on the production floor, and their presence will only become more prevalent in the near future – the consultants brought up the Purdue Bible, indicated that all those are clearly part of Level-5, aren’t they? i.e., above Level-4; and pointed us sheepishly to the First Commandment, which prevented any direct data link between Level-4 (and above) and Level-3 (and below)…

The Third & Fourth Commandments

At the other battlefront, the tech entrepreneurs saw all this and realised they obviously have to abide by the two Commandments of their fellow consultants.

Not to be outdone, however, they quickly added their own contribution to the Purdue Bible, announcing the Third Commandment: “Thou shalt only operate on-prem”; i.e., in the closed, air-gapped universe defined by Levels 0-3 of the Purdue Model.

That’s great, they said; but after having a second look at their new battlefield, they quite obviously started asking themselves: “but wait a second; what could we do if our universe is limited to Levels 0-3?…” (theoretically; in practice very few tech innovators braved looking below Level 2). Well, not much really…

And up came their Fourth Commandment: “Thou shalt only develop Intrusion Detection Systems (IDS)”. When they later discovered that Intrusion Detection in an ICS environment is far from trivial – in fact it is very complex, and does not necessarily yield successful results – they amended the Fourth Commandment with the addendum “… and Asset Discovery”. This indeed became the best-selling functionality of their software solutions, now eagerly promoted by the professional services community, which realised how simple it is to sell such a service (very important in its own right!) to its clients.

Those guidelines quickly had any further digitisation of the manufacturing floor in the crosshairs. With the first casualty being the ongoing implementation of demand-driven supply chain management processes (ref. our Motivational Underpinning above), that – as we’ve seen – required massive digital communication between various decision support tools for demand planning and fulfilment back-office (Levels 4-5), and manufacturing operations & execution on-prem (Levels 0-3); all obviously in violation of the Purdue Bible’s First & Second Commandments. In addition, and equally importantly, the Industry 4.0 wave of digital transformation now had to progress carefully and slowly in the process industry; since it, too, relied heavily on Industrial IoT, with ample Cloud services to boot…

And so, here we are, my friends, heading into 2023, having to admit – as an ecosystem – to committing the Original Sin, by adopting the Purdue Model as our sole underlying framework for cybersecurity of ICS and OT… And, consequently, to take responsibility for the two major drawbacks that resulted from our sin: (i) the adherence to the first two Commandments in our Purdue Bible disconnected the manufacturing supply chain, depriving the industrial enterprise of the real benefits of true digital transformation on the manufacturing floor, and of its decade-long investment in turning its supply chains into demand-driven ones; and (ii) the adherence to the last two Commandments blocked, or at least severely slowed down, true technology innovation in the space of OT Security for nearly 10 years!

The Future of OT Security

All this is about to change. For one very simple reason: At the end of the day, one doesn’t become an Executive at e.g., ExxonMobil to so easily accept “this is impossible” for an answer…

And after the process industry has invested so heavily in people, processes and technology to turn its supply chains on their heads, and to steer and manage them from the demand side, it will not accept being deprived of the benefits of this digital transformation for too long.

True, the process industry is slow moving and highly risk averse. So for several years the Purdue believers had the upper hand, convincing the leaders of the industrial enterprise to put their digital transformation dreams on hold, in the name of cyber-securing their manufacturing assets. But under the surface, things were brewing; albeit at the pace (but also consistency) so typical to the process industry. The topic started being discussed in professional groups and conferences, and forums started forming, with the unstated mission to reclaim the industry’s independence to expedite its digital transformation processes and resurrect its demand-driven supply chain management, also in the face of cyber risks.

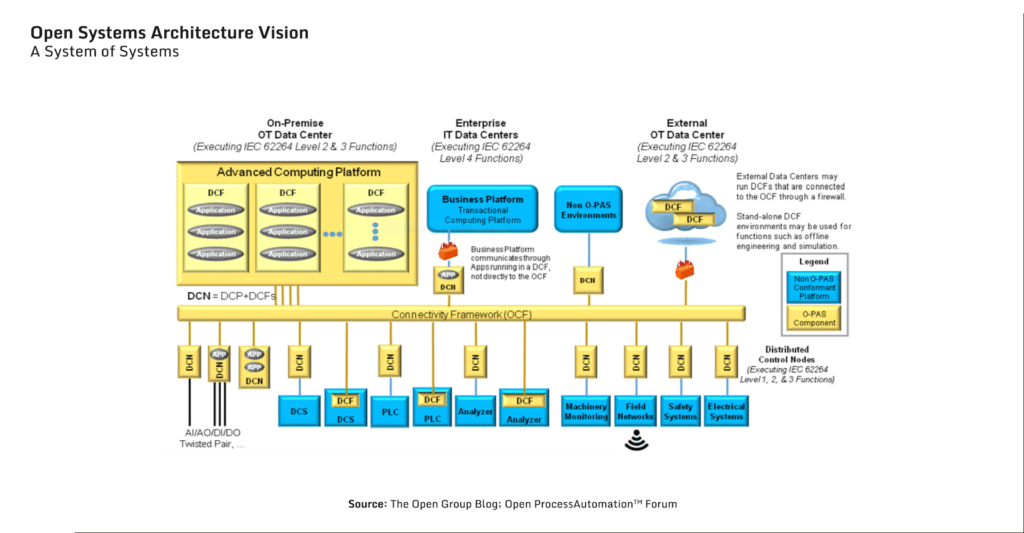

Chief among them – in my opinion the one with, by far, the best chances to succeed today – was an initiative started by ExxonMobil back in early 2017, called OPAF – the Open Process Automation™ Forum (part of The Open Group). One of its missions – and that of similar forums (see Info-Box below) – was simple, even though not stated in those exact words: To replace the dogmatic and overly simplistic Purdue Model with a new model in the zeitgeist of today’s highly dynamic digital arena, to be designed from the ground up to fit into the world of Cloud and IoT, and with security in mind (aka “secure by design”). The Forum quickly came up with its O-PAS standard, now already in its version 2.1 release (Figure 3).

True to its vision, it is, indeed, a fitting model for the modern digital age, also for cybersecurity (even though it wasn’t the prime objective).

At its core it has edge devices, called DCNs – Distributed Control Nodes – that are small, low-cost I/O and process control components that can perform control functions (including complete process control loops) – hence in principle replacing traditional PLCs – and support device configuration and device management. But – perhaps most importantly (for us, the OT Security professionals) – being an edge device, a DCN can also run applications, perform continuous device monitoring and diagnostics, and manage communication securely between devices, IoT devices and higher layers, including the Cloud. All the above is to be deployed at scale – note that there could be hundreds of DCNs in a typical process unit, and an order of magnitude more in a large manufacturing complex. But while perhaps unimaginable in a traditional process control setting, such scale could be achieved today with e.g., Docker, or other container tooling.

NAMUR’s Alternative Initiative

As indicated above, OPAF is not the only industry initiative with the objective of developing an open, integrated, and secure industrial control architecture. Another successful initiative is coordinated by NAMUR (an international association of users of automation technology and digitisation in the process industry, based in Germany). NAMUR has created a similar initiative to evolve the Process Industrial Automation architecture into a world of new connectivity, called NOA (NAMUR Open Architecture). Similar to OPAF’s DCN concept, NOA is implementing the Module Type Package (MTP)/Modular Automation concept (also promoted by ABB & Siemens). It has already initiated several trials, e.g., BASF’s OPA demonstrator for the chemicals industry, which uses MTP-compliant technology based on the NOA programme. For the sake of simplicity in this article – and because I foresee future convergence of the NOA concept into the O-PAS framework – I will continue using O-PAS as my leading example.

To allow for a (likely long) transition period, virtual DCNs would interface with existing physical devices, acting as a “wrapper” around legacy PLCs, DCSs, etc, and lending them their communication and security functionality.

As such, an O-PAS based architecture allows secure data exchange between DCNs, but also with non-O-PAS compliant DCSs, PLCs and I/O devices.
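The “wrapper” idea is essentially the classic adapter pattern, and a toy version may help make it concrete. The sketch below is purely illustrative and is not the O-PAS API: `LegacyPLC`, `VirtualDCN`, the register address and the client names are all hypothetical, and a simple allow-list stands in for the real security functionality a DCN would provide.

```python
from dataclasses import dataclass, field


class LegacyPLC:
    """Stand-in for a legacy device that only exposes raw registers."""

    def __init__(self, registers: dict):
        self._registers = dict(registers)

    def read_register(self, address: int) -> float:
        return self._registers[address]


@dataclass
class VirtualDCN:
    """Hypothetical wrapper lending access control and naming to a legacy device."""

    device: LegacyPLC
    tag_map: dict                      # tag name -> raw register address
    authorized_clients: set = field(default_factory=set)

    def read_tag(self, client_id: str, tag: str) -> float:
        # The legacy device has no notion of callers; the wrapper adds it.
        if client_id not in self.authorized_clients:
            raise PermissionError(f"client {client_id!r} may not read {tag!r}")
        return self.device.read_register(self.tag_map[tag])


plc = LegacyPLC({40001: 72.5})
dcn = VirtualDCN(plc, {"reactor_temp": 40001}, {"historian"})
print(dcn.read_tag("historian", "reactor_temp"))  # 72.5
```

The point of the design is that the legacy device stays untouched: callers only ever talk to the wrapper, so access control and a uniform tag vocabulary can be added without modifying the PLC or DCS behind it.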

The entire communication takes place over a common bus – the OPA Connectivity Framework (OCF). This bus is based on the OPC UA communication standard (see Info-Box), that allows the gateway DCNs to provide the raw process data to the Real-Time Advanced Computing (RTAC) platform for further data processing. All this data orchestration and system management is handled by the O-PAS System Management (OSM), which is basically the brain behind the entire O-PAS architecture.

Since the rest of the IT universe also communicates with the DCNs over the same single bus, OPAF calls its standard the “Standard of Standards.” Which is rather presumptuous perhaps, but, well, describes it rather accurately, in view of the myriad of standards and protocols, open and proprietary, deployed today in our aging ICS environments.

This article is not meant to be a detailed guide to the O-PAS standard; but ample references and analyses of it could be found on the net – which I certainly recommend investing your time in going through.

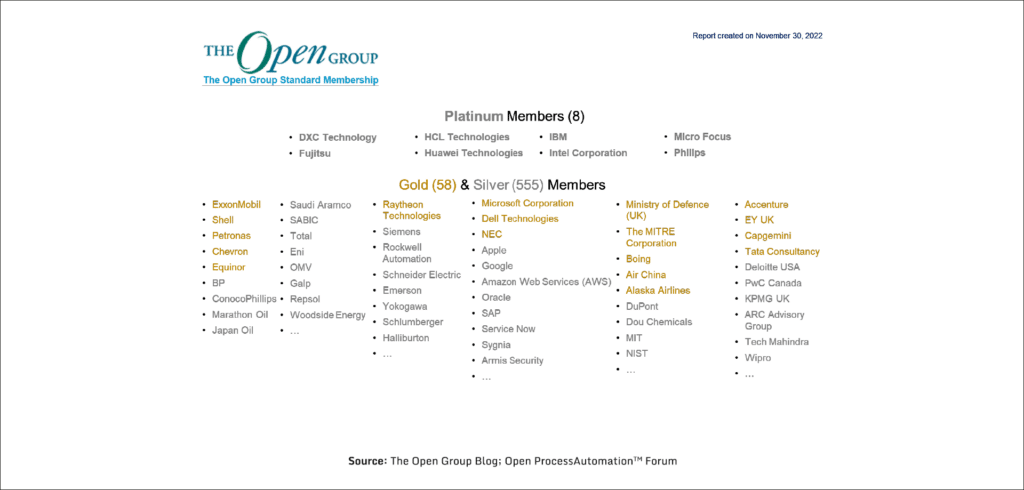

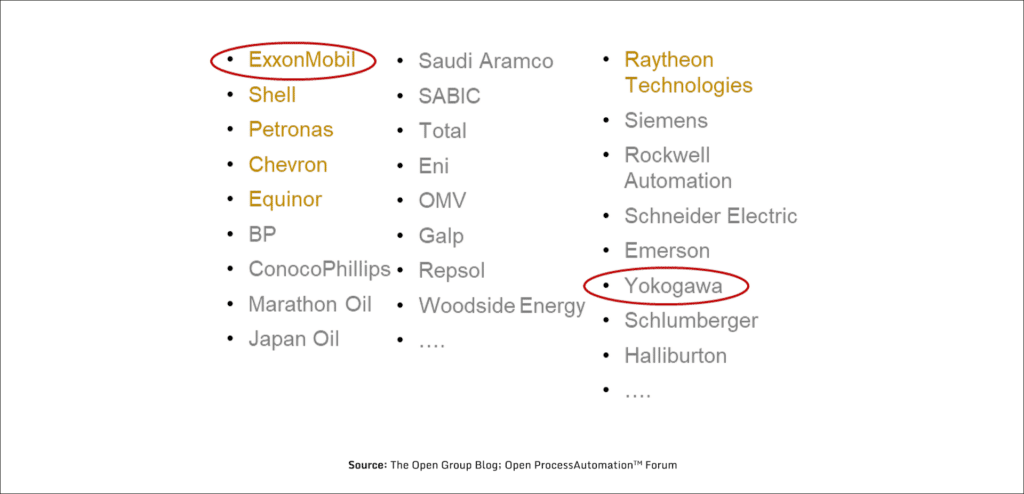

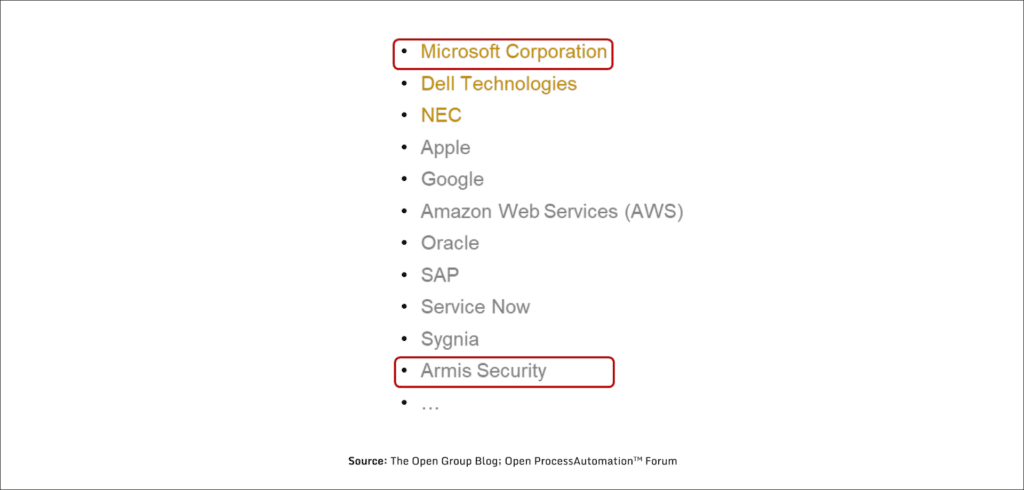

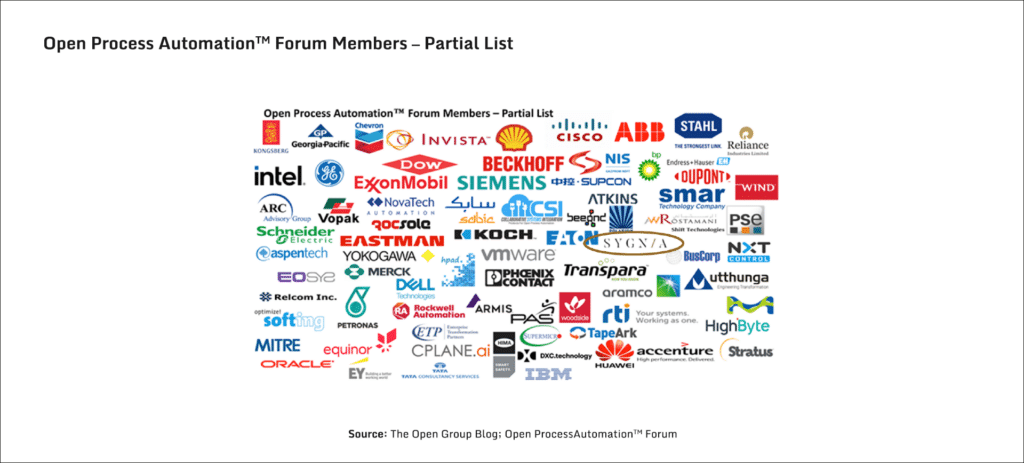

OPAF had a slow start, like many innovations in the process industry. But it is picking up steam. Today the forum counts nearly 900 associated entities (and over 600 active Members), including pretty much all the significant oil & gas multinationals – ExxonMobil, Shell, BP, Chevron, etc; all process control & automation vendors – Yokogawa, Siemens, Rockwell Automation, Schneider Electric, Emerson, etc; most of the leading software providers – Microsoft, Apple, Google, AWS, SAP, etc; all the leading consulting firms – Accenture, EY, Deloitte, KPMG, PwC; and many, many more (see Figure 4 for an illustrative sub-selection).

The OPC Unified Architecture (OPC UA)

OPC UA is a cross-platform, open-source, IEC 62541 standard, developed by the OPC Foundation, for data exchange from sensors to Cloud applications. The goal in developing the OPC UA standard was to provide a platform-independent service-oriented architecture that would better meet the emerging needs of industrial automation. Its first version was released in 2006, after more than four years of specification work and prototype implementation. The current version of the specification, 1.04, was released in 2017, and supports publish/subscribe mode in addition to the client/server communications infrastructure.

It is important to note that this communication standard, while relatively new compared to the common standards used for process automation (some are decades old), doesn’t by itself guarantee cyber security! In this context it is interesting to note that an urgent security advisory released in April 2022 by CISA – jointly with the U.S. DoE, NSA and FBI, and the commercial companies Dragos and Mandiant – warns that “certain advanced persistent threat (APT) actors have exhibited the capability to gain full system access to multiple industrial control system (ICS)/supervisory control and data acquisition (SCADA) devices.(*)” The list of devices impacted includes Schneider Electric MODICON and MODICON Nano PLCs, OMRON Sysmac NJ and NX PLCs, and… OPC Unified Architecture (OPC UA) servers!

(*) The malware (perhaps better referred to as an ICS cyberattack platform) was named PIPEDREAM and Incontroller by Dragos and Mandiant respectively; and the threat actor behind it – assessed with high confidence to be a state actor – is tracked by Dragos as Chernovite.

Several O-PAS pilots have been run in recent years, the most recent involving the development of an OPA Test Lab, operated by ExxonMobil Research & Engineering near Houston, TX, USA. Then, in early 2022, ExxonMobil announced that it is starting the first true, full-scale field trial of an Open Process Automation System designed to operate an entire production facility (with more than 2,000 I/Os), at an ExxonMobil manufacturing facility on the US Gulf Coast. The new system will replace the existing Distributed Control System (DCS) and Programmable Logic Controllers (PLCs) with a single, integrated system that meets the Open Process Automation Standard (O-PAS). ExxonMobil further selected the industrial automation and process control vendor Yokogawa as its system integrator for this undertaking. The field trial is scheduled for commissioning in 2023.

Others are starting with similar, albeit less ambitious, projects. In the Middle East, an OPA Testbed is being built in collaboration between Saudi Aramco and Schneider Electric; the latter will also host the Test Lab at its Innovation & Research Centre in Dhahran, Saudi Arabia. In Europe, Dow Chemical works on its MxD – an Open Architecture Testbed, which is reportedly being used to explore OPA along with digital twin concepts. Several other pilots are in advanced planning phases and are expected to be launched in the course of the coming year.

The process industry (being the process industry…) is carefully watching all this activity from the side-lines, albeit with ever increasing interest. Especially given that the first full-scale field trial involves the biggest Oil & Gas supermajor and a leading industrial automation powerhouse. Rest assured that as soon as success of the new concept is proven, the industry will jump en-masse into the game, also ushering in – in parallel – a new world of ICS/OT Security.

Winners and Losers

I have no doubt that the process industry is on the cusp of this (or similar) paradigm shift. The only question in my mind is how quickly it will start unfolding in earnest. Personally, I’d set my prediction at a rather conservative horizon of 2-4 years from now. Which means that now is the time to start getting prepared…

As such a tectonic shift in concept takes place, it will undoubtedly lead to winners and losers across the industrial battlefield. Let us go through some of the most notable of those (in my opinion at least), albeit briefly.

Decision Makers (and their Advisors)

At the enterprise decision-making level, the Executives of the process industry will win, without doubt; and get back the ability to resume digitally transforming their manufacturing operations and managing their supply chains in a demand-driven way. Just have a quick look at the member-list of OPAF (a small subset of which is shown in Figure 5 below, for illustration purposes), to realise the amount of influence they wield together in the industry. Among them, ExxonMobil – the driving force behind the OPAF initiative, and Yokogawa – the system integrator for its flagship project, stand to gain a significant first-mover advantage; which, if used smartly, will give both a rather luxurious starting point in the big race.

The industry Executives stand to win big also from a very important “side-effect” of such shift: IT/OT Convergence. For several years now IT/OT Convergence has been topping the agendas of trailblazing manufacturing enterprises, still with limited tangible results to show. One of the main reasons that such convergence is still being considered as a big challenge, despite the executive focus and priority it receives, is that to-date it was attempted top-down, conceptually – indeed adhering to the Purdue Model. I argue that the shift away from the Purdue Model to modern-day architectures such as O-PAS, will bring about IT/OT Convergence by default. A quick look at the Open Systems Architecture (ref Figure 3) reveals that it will support the IT/OT Convergence naturally and natively, building it bottom-up in a holistic way.

The consultants and services providers will see some losers among them, in particular those that opted to ignore the new developments, instead of mastering them and investing in making their service offerings ready for the future they usher in. Others would face a speed bump, being forced to adjust – on short notice – their sales pitches and methodologies to the will of their clients. But overall consultants are a resilient bunch and good learners; just give them some time and a good PowerPoint template and they’ll quickly rebuild their slide-decks and align them to the new reality… So no big worries for their position in the future of OT Security; this position, at least, is secured.

One segment of the cybersecurity services portfolio deserves a closer look though – that of the top-tier Incident Response (IR) providers. The sweeping adherence to the Purdue Model over the last decade, created a situation where also highly technical cybersecurity services (Incident Response, Threat Hunting, Breach Assessments, Red Teaming, etc) got fragmented into two specialised trades, for IT and for OT. A good example hereof is the response to the notorious ransomware attack on Colonial Pipeline in May, 2021, which required Colonial Pipeline to hire two top-tier IR specialists to advise it: FireEye/Mandiant for the IT part, and Dragos for the OT part. The anticipated shift away from the fragmented, Purdue-led thinking to a convergent IT/OT architecture, like O-PAS, will allow IR specialists to consolidate their methodologies, tools, and vast experience, and provide enterprise-wide Incident Response and related services as a one-stop shop offering. This will undoubtedly create a major opportunity for specialised IR providers at the top tier of the market.

| One of those top-tier IR companies that I believe stands to benefit most from such a paradigm shift is, of course, Sygnia – a high-end consulting and incident response company, which natively integrates its capabilities across the digital estate (IT & OT), and already provides enterprise-wide Incident Response services. On a personal note, my decision to join Sygnia several months ago, as VP OT Security, was also motivated by the above analysis and the conclusions I reached from it. |

Underlying Models

At long last, the Purdue Model will lose its central position as the Bible for OT Security, and will return to obscurity, featuring in a chapter on the history of process control in Process Control 101, with a mention of an interesting 10-year stint it had as a model for industrial cybersecurity…

O-PAS (or similar standards) will undoubtedly win, and become the new industry standard for process automation and hence the de-facto conceptual architectural framework for OT Security too.

Out of the Four Commandments in the by-then-obsolete Purdue Bible, only the first one – promoting network segregation & segmentation – will survive; albeit further nuanced to cover a true and complete segmentation (rather than a Perimeter Security based merely on IT/OT separation), and somewhat downgraded from being the core methodology to a very important best-practice. All the other three Commandments – not using the internet on the manufacturing floor; developing only on-prem cybersecurity technology solutions; and focusing solely on IDS and Asset Discovery functionality – will disappear, and – similar to the Purdue Model which was the underlying reason for them all – will become a chapter in the history books of OT Security.

Technology Solution Providers

The 64 thousand billion dollar question… 🙂 – the technology solution providers (aka “IDS Vendors for OT”). On balance, most of them (with a few exceptions, see below) will enter this future in a challenging position, having for far too long restricted their product innovation to the narrow space defined by the last two Commandments of the Purdue Model: developing mostly on-prem IDS and Asset Discovery solutions. Some of them have already paid the price for this innovation myopia (or perhaps pragmatism), and were acquired at conservative valuations (very, imho) in a series of successive acquisitions: first Indegy, acquired by Tenable in late 2019 for $100-120M; then Cyberbit, acquired by Charlesbank Capital Partners for $48M, and CyberX, acquired by Microsoft for around $165M, both in mid-2020; and more recently Radiflow, acquired by Sabanci Group in April 2022 for $45M (for a 51% stake, plus an option to buy the remaining 49% by 2025). The remaining players in this dwindling field all seem sufficiently well-funded to remain in the game for the foreseeable future, but they will be forced to channel a significant portion of their (investors’) funds into massive R&D investment, in an uphill catch-up game with the few that have seen all this coming and developed their solution strategy accordingly. One, namely Claroty, has already started this process, with a whopping $300M acquisition of Medigate in early 2022 and its subsequent impressive shift to the Cloud.

Two stand out in this crowd, imho: Microsoft, which – following its acquisition of CyberX in mid-2020 – repurposed its solution as “Azure Defender for IoT”, realising around the same time (and very accurately) that “the traditional ‘air-gapped’ model for OT cybersecurity had become outdated in the era of IT/OT convergence and initiatives such as Smart Manufacturing and Smart Buildings (while) the IoT and Industrial Internet of things (IIoT) are only getting bigger” [Phil Neray, Director of Azure IoT & Industrial Cybersecurity at Microsoft, Nov 2020]; and Armis Security, which had built its entire solution stack, from the ground up, to fit the new connected world of Cloud, IoT and OT. Armis Security’s stated mission, “to enable enterprises to adopt new connected devices without fear of compromise by cyberattacks”, perfectly matches the wishes of process industry Executives, and hence this shift is right up its alley.

And so, it is not surprising that the only two representatives of the “IDS Vendors for OT” in the Open Process Automation™ Forum (OPAF) are indeed Microsoft and Armis Security (see Figure 6). The rest, stuck perhaps too deep in Levels 0-3 of the Purdue Model ;-), either don’t consider it important enough, or, more disturbingly, this whole development and shift in market perception may have simply escaped their corporate attention.

Which is another reason why I believe that when the dust settles, those who prioritised actively partaking in shaping the Future of OT Security, in forums such as OPAF and NAMUR, will emerge as the winners. This is precisely what we set out to do at Sygnia when we joined OPAF in 2022 (see Figure 7).

A Closing Remark Regarding the National Cyber-Informed Engineering (CIE) Strategy

In June 2022, as I was gearing up to start writing this paper, the Office of Cybersecurity, Energy Security, and Emergency Response at the U.S. Department of Energy (DoE) released its National Cyber-Informed Engineering (CIE) Strategy – the culmination of a major effort led by the Department’s Securing Energy Infrastructure Executive Task Force (SEI ETF).

The strategy encourages the adoption of a “security-by-design” mindset in the Energy sector (as well as in other critical infrastructures), i.e., building cybersecurity into the industrial control systems at the earliest possible stages rather than trying to “bolt on” cybersecurity to secure the critical systems after deployment. The framework further outlines five integrated pillars, offering a set of recommendations to incorporate CIE as a common practice across the energy and other critical infrastructure sectors: Awareness; Education; Development; Current Infrastructure; and Future Infrastructure. Specifically the fifth pillar – Future Infrastructure – advocates to “conduct R&D and develop an industrial base to build CIE into new infrastructure systems and emerging technology.”

The DoE’s Strategy paper is descriptive in nature (perhaps too descriptive, for such important guidance that spanned 5 years of intensive work, involving dozens of domain experts). Had the task force opted for a more prescriptive approach, I have absolutely no doubt that we would have seen elements of the Future of OT Security – as depicted in this article – popping up across its five pillars. Specifically, it doesn’t require much imagination to see that the “Future Infrastructure” pillar in particular is a direct call for the type of work done by OPAF and NAMUR, and for the development of conceptual architectures such as those offered by their O-PAS and NOA, respectively.

* Title photo courtesy of Bentley Systems

This advisory and any information or recommendation contained herein has been prepared for general informational purposes and is not intended to be used as a substitute for professional consultation on facts and circumstances specific to any entity. While we have made attempts to ensure the information contained herein has been obtained from reliable sources and to perform rigorous analysis, this advisory is based on initial rapid study, and needs to be treated accordingly. Sygnia is not responsible for any errors or omissions, or for the results obtained from the use of this Advisory. This Advisory is provided on an as-is basis, and without warranties of any kind.

| Disclaimer: OT Security is a broad discipline, covering a wide range of industrial and operational domains: from manufacturing, through oil & gas and energy, to transportation, logistics, healthcare, etc. While some of the principles discussed in this paper are applicable to OT Security across those domains, this article focuses primarily on the process industry segment of OT Security; which also includes most of the critical infrastructures: Oil & Gas, Chemicals, Power Generation, Water Resources, Mining, Pharmaceuticals, etc. |
